The rapid evolution of Large Language Models (LLMs), Retrieval-Augmented Generation (RAG), and Model Context Protocol (MCP) implementations has led many developers and teams to adopt and integrate these powerful technologies quickly. Driven by the fear of missing out (FOMO) on potential competitive advantages and by eagerness to deliver value rapidly, teams often bypass essential controls, formality, and critical oversight. Although rapid adoption of emerging technologies is common, if not inevitable, it often precedes proper due diligence, exposing projects to serious intellectual property (IP), privacy, and security risks.

In this joint post, 0DIN and Ankura outline a proactive framework for mitigating the security and scalability risks that accompany LLM, RAG, and MCP initiatives. We sharpen the focus on the pivotal questions every team should ask before, during, and after development to increase the likelihood of a secure, scalable, and ultimately successful launch.

Developer or Implementor

-

Do I fully understand the LLM and data strategy I want to use to deliver value?

Evaluating the risk tolerance of the organization you're designing the system for is a foundational step in shaping its security posture and architectural decisions. Determine if they are comfortable using a hosted LLM provider (OpenAI, Claude, etc.) or require a self-hosted or contained LLM solution (Ollama, Amazon Bedrock, Azure AI, etc.). Clarify the capabilities needed from the LLM, and evaluate which type of LLM to use (open source, closed source or a custom fine-tuned model). A solid design pattern and clear answers to these considerations should be defined upfront.

-

What strategies am I employing to safeguard user privacy and data integrity?

Ensure you thoroughly understand the types of data the implementation will handle and any potential user inputs it should accept and deny. Adopt privacy measures appropriate for the types of data being handled, such as anonymization, pseudonymization, and data minimization to mitigate privacy and data security risks applicable to your business. Additionally, ensure secure API configurations and robust encryption practices are in place for data both in transit and at rest.
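As a rough illustration of the privacy measures above, the following Python sketch applies data minimization, keyed pseudonymization, and simple redaction to a record before it is sent to an LLM. Everything named here (`PSEUDONYM_KEY`, `prepare_for_llm`, the field names, and the email pattern) is a hypothetical assumption for illustration, not part of any specific product or framework. A real deployment would load the key from a secrets manager and cover far more identifier types than email addresses.

```python
import hashlib
import hmac
import re

# Hypothetical key for illustration; load it from a secrets manager in practice.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

# Deliberately simple email pattern; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map an identifier to a stable keyed token (HMAC-SHA256, truncated)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:16]}"


def redact_emails(text: str) -> str:
    """Replace email addresses with a fixed placeholder."""
    return EMAIL_RE.sub("[EMAIL]", text)


def minimize(record: dict, allowed_fields: set[str]) -> dict:
    """Keep only the fields the LLM call actually needs."""
    return {k: v for k, v in record.items() if k in allowed_fields}


def prepare_for_llm(record: dict) -> dict:
    """Minimize first, then pseudonymize the ID and redact the free text."""
    slim = minimize(record, {"user_id", "message"})
    return {
        "user_id": pseudonymize(slim["user_id"]),
        "message": redact_emails(slim["message"]),
    }
```

Because the token is keyed, the same user maps to the same pseudonym across requests, while anyone without the key cannot easily reverse or recompute it.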

-

Am I proactively identifying and addressing potential security vulnerabilities (prompt injection, command injection, sensitive data leakage, IAM, etc.)?

Proactive threat identification requires more than ad hoc reviews or static checklists. It demands continuous, contextualized threat modeling tailored to your LLM/RAG/MCP architecture and specific use cases. Start by establishing strict input validation and output sanitization pipelines that are resilient to prompt injection, data exfiltration, and unintended command execution.
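One way to picture an input validation and output sanitization pipeline is the minimal Python sketch below. The limits, pattern lists, and function names are assumptions chosen for illustration. Pattern matching alone will not stop prompt injection, so treat this as one layer among several rather than a complete defense.

```python
import re

MAX_INPUT_CHARS = 4000  # assumed limit; tune to your use case

# Illustrative deny patterns only; attackers can rephrase around them.
SUSPICIOUS_INPUT = [
    re.compile(r"ignore (all|any|previous|prior) .*instructions", re.IGNORECASE),
    re.compile(r"reveal .*system prompt", re.IGNORECASE),
]

# Illustrative secret shapes to scrub from model output before it is returned.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),  # commonly cited AWS access key ID shape
    re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
]


def validate_input(text: str) -> tuple[bool, str]:
    """Return (accepted, reason) for a user-supplied prompt."""
    if len(text) > MAX_INPUT_CHARS:
        return False, "input too long"
    for pattern in SUSPICIOUS_INPUT:
        if pattern.search(text):
            return False, "suspicious instruction pattern"
    return True, "ok"


def sanitize_output(text: str) -> str:
    """Redact secret-looking strings from model output."""
    for pattern in SECRET_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text
```

Rejected inputs and redacted outputs are also useful signals to feed into monitoring and threat modeling.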

Leverage structured red teaming, adversarial testing, and LLM-specific penetration testing to simulate real-world attacks. Integrate ongoing vulnerability scanning into your MLOps and DevSecOps workflows as a continuous practice rather than a periodic task.

Develop threat models proactively when building AI systems. Security teams should review traditional Identity and Access Management (IAM) configurations, especially when designing identities, permissions, and access for an LLM/RAG/MCP architecture. Give careful consideration to identity management, surrogate authentication, session management, encryption, and privilege escalation.
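To make the IAM point concrete, here is a small Python sketch of a deny-by-default authorization check placed in front of agent or MCP tool calls. The roles, tool names, and the `Identity` type are hypothetical and exist only for this example. A production system would typically delegate these decisions to its existing identity provider and policy engine.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Identity:
    """A caller (human or agent) and the roles granted to it."""
    name: str
    roles: frozenset[str] = field(default_factory=frozenset)


# Hypothetical role-to-tool mapping; anything not listed is denied.
ROLE_TOOLS = {
    "support_agent": {"search_kb", "read_ticket"},
    "admin": {"search_kb", "read_ticket", "update_ticket"},
}


def allowed_tools(identity: Identity) -> set[str]:
    """Union of the tools granted by each of the identity's roles."""
    tools: set[str] = set()
    for role in identity.roles:
        tools |= ROLE_TOOLS.get(role, set())
    return tools


def authorize_tool_call(identity: Identity, tool: str) -> None:
    """Raise PermissionError unless the identity may call the tool."""
    if tool not in allowed_tools(identity):
        raise PermissionError(f"{identity.name} may not call {tool}")
```

Checking authorization per call, rather than once per session, limits the damage if a prompt injection convinces an agent to attempt a tool it was never meant to use.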

-

Can we proactively monitor and log for malicious use and misuse of AI?

It's critical to implement proactive monitoring and robust logging systems to detect and address malicious use or misuse of your AI. These systems track granular system activity, user interactions, and AI outputs, providing a vital audit trail for identifying security breaches, data poisoning, or model manipulation, and enabling swift response and continuous security enhancements.
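A minimal sketch of such an audit trail, using only the Python standard library, might look like the following. The logger name, event fields, and the choice to store a hash and length instead of the raw prompt are assumptions made for illustration. Your own retention and privacy requirements should decide what is actually recorded.

```python
import hashlib
import json
import logging
from datetime import datetime, timezone

# Hypothetical logger name; route it to your SIEM or log pipeline as needed.
audit_logger = logging.getLogger("llm.audit")


def build_audit_event(user_id: str, prompt: str, response: str, flagged: bool) -> dict:
    """Describe one interaction without storing raw prompt or response text."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "response_chars": len(response),
        "flagged": flagged,
    }


def log_interaction(user_id: str, prompt: str, response: str, flagged: bool = False) -> dict:
    """Emit one JSON audit line; flagged events are logged at WARNING level."""
    event = build_audit_event(user_id, prompt, response, flagged)
    level = logging.WARNING if flagged else logging.INFO
    audit_logger.log(level, json.dumps(event, sort_keys=True))
    return event
```

Emitting one JSON object per interaction keeps the trail easy to search and correlate, and the `flagged` field gives alerting rules a simple hook.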

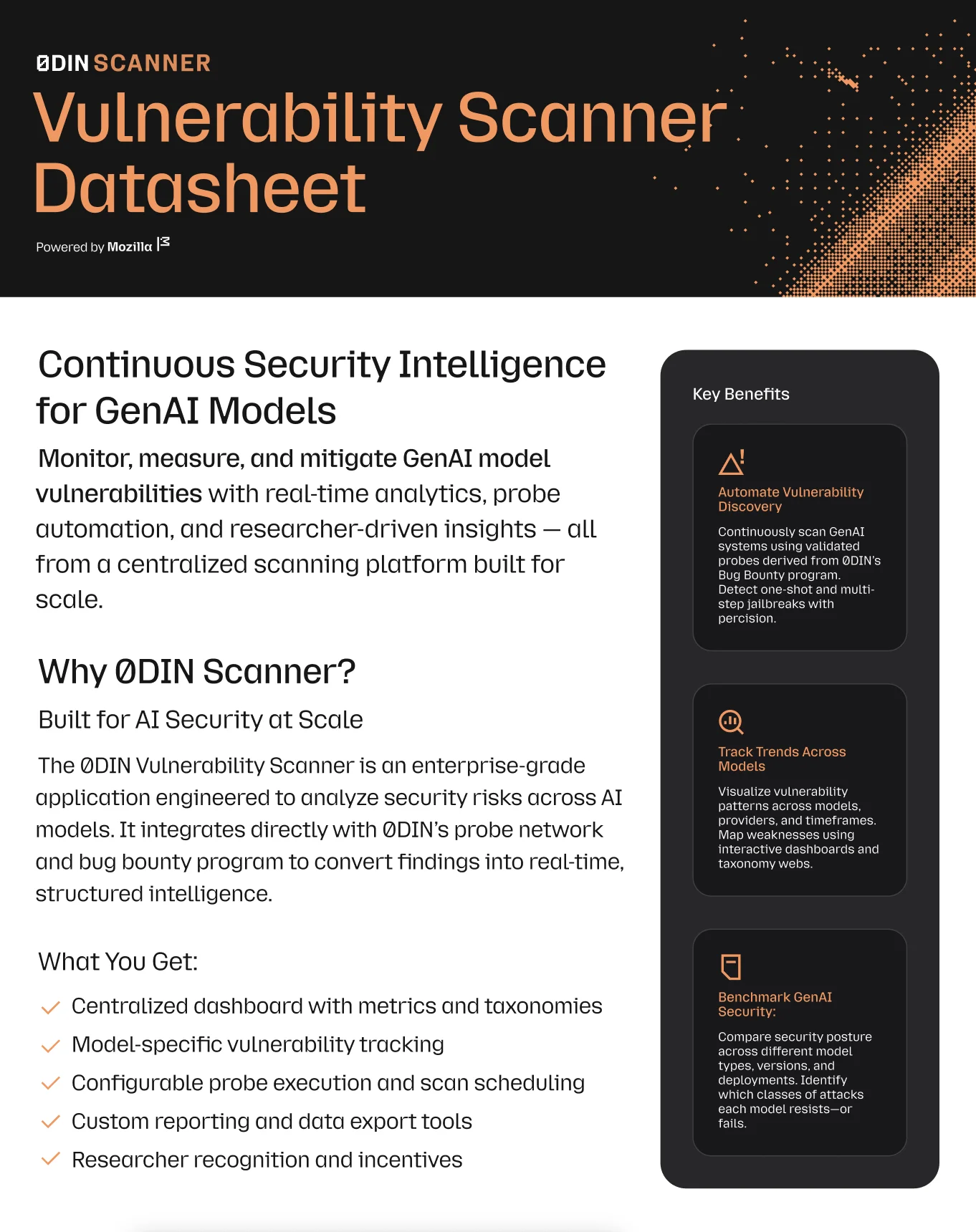


0DIN's Threat Feeds and Vulnerability Scanner

Earlier this week, 0DIN announced the launch of two new capabilities, Threat Feeds and a Vulnerability Scanner: purpose-built tools designed to help teams stay ahead of evolving risks in the LLM, RAG, and MCP ecosystems.

Our Threat Feeds deliver real-time intelligence on emerging exploit techniques, adversarial prompt patterns, and jailbreak strategies targeting modern AI systems. In parallel, the Vulnerability Scanner actively probes your deployed environments to identify jailbreak vectors, insecure configurations, and other critical weaknesses.

Ankura is recognized as a global leader in expert consulting and advisory services, distinguished by its multidisciplinary teams and end-to-end solutions that empower clients to protect, create, and recover value across critical inflection points worldwide. With over 2,000 professionals operating across more than 35 locations in 115+ countries, Ankura delivers tailored expertise in areas ranging from AI-powered transformation and cybersecurity to risk and compliance. At the forefront of innovation, Ankura has launched proprietary platforms such as Ankura AI, its secure, private LLM suite, and cutting-edge tools like NoraGPT, advancing both internal workflows and client-facing solutions with stringent data governance. In a strategic step to build a safer, more trusted AI ecosystem, Ankura is now collaborating with 0DIN, combining their deep domain knowledge and technological rigor to secure the future of AI through robust, standards-aligned consulting engagements. https://ankura.com/

-

How am I going to leverage agents or MCP based servers within my system?

Start by clearly defining the purpose and responsibilities of each agent or MCP implementation within your architecture. Outline how they will handle key functions such as data retrieval, task orchestration, and automation.

Ensure you assess the following areas:

- Security boundaries: Define trust zones and how each component will authenticate, authorize, and communicate securely.

- Scalability: Plan for how agents or MCP services will scale under load, especially in multi-tenant or high-throughput environments.

- Error handling: Establish robust mechanisms for fault tolerance, retries, and graceful degradation.
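The error-handling item above can be sketched as a small retry helper with exponential backoff and a fallback value for graceful degradation. This is a hedged illustration in Python: the function name, default attempt count, delays, and the choice of which exceptions count as transient are all assumptions, and a real agent or MCP client would map its own error types here.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    fallback: T,
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry transient failures with exponential backoff, then degrade.

    Only TimeoutError and ConnectionError are treated as transient here;
    any other exception propagates to the caller unchanged.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except (TimeoutError, ConnectionError):
            # Back off before the next try; skip the wait after the last one.
            if attempt < attempts - 1:
                sleep(base_delay * (2 ** attempt))
    # All attempts failed: return the degraded result instead of crashing.
    return fallback
```

For example, a tool call that cannot reach its backend could return a cached answer or a clear "temporarily unavailable" message as the `fallback`, so the rest of the workflow keeps running.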

Finally, evaluate the long-term impact of integrating agents or MCP servers into your system. Consider not only the immediate complexity and potential attack surface but also how these additions align with your platform’s future evolution and operational resilience.

Manager or Product Owner

-

Do I fully understand what data is being used in the system, how it's being used, and where it is going?

Make sure there is clear documentation of what data your system uses, what data it produces, and where that data resides. This includes third-party LLM providers' use of your user inputs and outputs, as well as any other service providers that developers may have leveraged to build the system. Conduct compliance audits regularly, document licenses meticulously, and engage legal expertise to review third-party agreements thoroughly for potential risks or liabilities.

-

In what ways are privacy and intellectual property protection considerations influencing product feature decisions?

Product feature decisions are increasingly shaped by the need to protect both user data and intellectual property. To address this, teams should adopt privacy-by-design and security-by-design principles early in the development lifecycle, ensuring that data protection is a foundational aspect of planning rather than an afterthought.

At the same time, intellectual property protection must guide how proprietary models, data, and system outputs are exposed or reused. This includes evaluating whether specific features could inadvertently leak sensitive model behavior, expose training data, or enable model extraction.

Collaborate closely with privacy, legal, and compliance teams to conduct privacy and IP impact assessments during feature planning and launch phases. These assessments should consider user risk profiles, geographic data regulations, and the potential for third-party misuse or reverse engineering.

By embedding these considerations into your product strategy, you reduce legal exposure and protect your core technology assets while building trust with users and regulators.

-

How effectively am I managing security and operational risks associated with third-party model or agent use?

Establish a systematic approach by conducting proactive, periodic risk assessments that cover MCP servers, AI agents, model performance, data leakage potential, and provider reliability. Engage security experts early to review architecture documentation and any third-party models or agents. Define clear accountability structures and mitigation strategies so that these risks are actively monitored and managed throughout the lifecycle of the system.

-

Are we transparently communicating our system’s capabilities, limitations, and associated risks?

Maintain transparency by clearly documenting and regularly updating stakeholders about the full capabilities, known limitations, and inherent risks of your LLM/RAG/MCP implementation. Provide this information through easily accessible documentation, user guides, and consistent product communication, fostering informed usage and maintaining user trust. Strive to ensure that the business fully understands the risks that your system may introduce as well as what security controls have been put in place to mitigate risks, and what additional controls may be required due to business needs and changes.

Executive Sponsor

-

Have we clearly defined our strategic objectives, business goals, and our risk tolerance for using LLM/RAG/MCP implementation?

Make sure your organization's strategic objectives and desired business outcomes for adopting AI technologies are clearly articulated and well-documented. Balance the urgency of leveraging AI against clearly defined risk tolerance levels to guide informed, consistent decision-making and investment by the organization. Communicate this strategic clarity consistently across teams to align efforts and expectations among both end users and developers of the system.

-

How are we ensuring ongoing compliance with intellectual property, privacy, and security regulations?

Establish a dedicated executive-level oversight group or committee tasked explicitly with continuously monitoring compliance with evolving intellectual property, privacy, and security standards. Engage your GC or outside counsel as well as your CISO to drive this continual analysis process for your organization. Allocate adequate resources for compliance tracking, regular audits, and ongoing regulatory training programs to maintain awareness and proactively address any emerging regulatory requirements or issues that may arise over time.

-

What measures are we taking to mitigate reputational and operational risks associated with AI?

Require comprehensive, regular risk assessments that specifically evaluate the reputational and operational risks associated with AI implementations. Utilize independent third-party audits to validate your security posture and compliance with internal and external standards. Integrate these security and compliance evaluations into your organization's broader enterprise risk management framework, ensuring clear accountability and systematic risk mitigation strategies.

-

How prepared are we to manage crises related to potential security incidents or data breaches?

Develop robust and well-defined crisis management and incident response frameworks that clearly outline roles, responsibilities, and communication pathways, particularly emphasizing senior leadership roles during crises. Regularly conduct realistic, scenario-based crisis response exercises to validate preparedness and responsiveness. Prioritize transparent, timely communication internally and externally to ensure rapid and coordinated stakeholder engagement.

-

How are we communicating our commitment to secure and responsible technology use to external stakeholders?

Proactively and clearly communicate your organization's commitment to privacy, security, and responsible AI practices through external channels such as corporate communications, annual sustainability and governance reports, public briefings, and direct stakeholder engagements. Provide detailed and transparent documentation outlining your system’s governance processes, security measures, regulatory compliance efforts, and risk mitigation strategies, thereby reinforcing trust and credibility with stakeholders and the broader community.

Final Thoughts

In their urgency to realize both perceived and real value from LLM- and RAG-based systems, leaders and developers may unintentionally introduce significant risks to an organization. Careful consideration of IP, privacy, and security issues at every stage minimizes negative outcomes and increases the probability of successful ones that can drive fundamental changes in how organizations generate and deliver value to their customers.

While these systems can easily outclass traditional systems from a flexibility and impact perspective, it is best to engage in a measured approach of use case crafting, engineering, security, and legal/regulatory compliance.


Ankura and 0DIN

0DIN provides cutting-edge continuous monitoring solutions designed to scan any large language model (LLM) using either on-premise or SaaS-based continuous scanners. These scanners deploy threat intelligence probes across various models and providers on an hourly, daily, or continuous integration/continuous deployment schedule. With the use of interactive dashboards, heat maps, and model comparisons, organizations can efficiently assess and automatically address risks associated with generative AI.

This co-authored post by 0DIN and Ankura explores advanced approaches to AI testing and monitoring. For more details, please contact the Ankura cybersecurity team or the 0DIN team.